Multimodal Data Fusion with Deep Neural Networks - Revolutionizing Oncology with Precision Cancer Diagnosis 2025


1. Abstract

The landscape of oncology is being fundamentally reshaped by an unprecedented explosion of biomedical data, spanning genomics, proteomics, diverse imaging modalities, clinical records, and real-world patient data. This wealth of information holds the key to a more precise and personalized understanding of cancer, from its earliest molecular origins to its therapeutic response and long-term prognosis. However, the sheer volume, velocity, and inherent heterogeneity of these disparate data streams pose significant analytical challenges, making it difficult for human experts to synthesize comprehensive insights. In this context, deep neural networks (DNNs), through their rapid evolution, have emerged as a transformative force, providing the computational capabilities needed for effective multimodal data integration in oncology. This review explores how DNNs that integrate diverse data types are advancing cancer diagnosis research, strengthening cancer management strategies, and refining therapeutic decision-making.

Traditional oncology approaches often analyze individual data modalities in isolation, leading to fragmented insights that may overlook crucial inter-modal relationships critical for a complete understanding of cancer biology. DNNs, with their remarkable ability to learn complex, non-linear representations and identify intricate patterns across vast and varied datasets, are uniquely positioned to overcome these limitations. Architectures such as convolutional neural networks (CNNs) excel at extracting features from image data, while recurrent neural networks (RNNs) and transformer models are adept at processing sequential genomic or clinical time-series data. The true power of DNNs in oncology lies in their capacity for multimodal fusion, where information from multiple data sources is combined at various levels (early, intermediate, or late fusion) to generate a holistic, enriched representation of a patient's disease.

This multimodal approach, powered by DNNs, is already showing substantial implications across the entire cancer care continuum. In cancer diagnosis, DNNs that integrate radiological images with pathology slides and genomic mutations are reported to improve the accuracy and efficiency of tumor detection, classification, and staging, in many studies outperforming unimodal or traditional methods. A growing number of case studies attest to the strong performance of these digital diagnostic tools. In cancer management, DNNs are proving instrumental in predicting treatment response, identifying patients likely to experience recurrence, and stratifying risk, thereby guiding precision therapy and personalized treatment selection. Beyond individual patient care, multimodal deep learning accelerates drug discovery, biomarker identification, and the understanding of resistance mechanisms.

While the promise is immense, widespread adoption of these technologies faces hurdles, including the need for large, high-quality, and ethically managed datasets, the limited interpretability of complex DNN models for clinical decision-making, and the robust validation required to secure regulatory certification for new digital diagnostic tools. This review critically examines the state of the art in multimodal data integration for oncology using DNNs, addressing current applications and inherent challenges and charting a roadmap for future research. As we approach 2025, the synergy between deep learning and multimodal data promises to usher in an era of truly personalized and highly effective cancer care, fundamentally transforming how cancer is diagnosed, understood, and treated.

2. Introduction

The relentless pursuit of a cure for cancer is increasingly reliant on our ability to harness the vast and complex biological data generated from each patient. From the intricate genomic sequences that dictate cellular behavior to the macroscopic insights provided by radiological imaging, and the microscopic details revealed by pathology slides, modern oncology generates an unprecedented volume of heterogeneous information. Traditionally, these disparate data modalities have often been analyzed in silos, leading to fragmented understanding and limiting the full potential of personalized medicine. However, the inherent complexity of cancer, characterized by its multifactorial etiology, diverse molecular subtypes, and dynamic progression, necessitates a holistic, integrated approach to truly unravel its secrets.

In recent years, the rapid advancements in artificial intelligence, particularly in the domain of deep neural networks (DNNs), have provided the computational horsepower and algorithmic sophistication required to tackle this grand challenge. DNNs, with their remarkable capacity for learning hierarchical features and discerning subtle patterns from large, unstructured datasets, are uniquely positioned to bridge the gap between different data modalities. By integrating genetic, proteomic, imaging, clinical, and even environmental data, DNNs can construct a more comprehensive and nuanced representation of a patient's cancer, enabling predictions and insights far beyond what unimodal analysis can achieve.

This review article delves into the transformative landscape of multimodal data integration in oncology powered by deep neural networks. We aim to provide a comprehensive overview of how these cutting-edge digital tools are changing every stage of the cancer care continuum, from improving the accuracy and speed of cancer diagnosis to guiding management strategies and personalizing treatment selection. We explore the underlying principles of multimodal data fusion, discuss prominent DNN architectures that facilitate this integration, highlight compelling case studies and the latest research, and critically examine the remaining challenges and future directions. As we look toward cancer diagnosis in 2025, understanding and leveraging these integrated AI approaches will be paramount for realizing the full promise of precision oncology.

3. Literature Review

3.1. The Grand Challenge of Cancer Complexity and Data Heterogeneity

Cancer is not a singular disease but a constellation of highly heterogeneous conditions, each driven by unique genomic alterations, proteomic signatures, cellular behaviors, and interactions with a dynamic microenvironment. This inherent complexity presents a monumental challenge for accurate diagnosis, precise prognosis, and effective management. Modern oncology generates an unparalleled volume of data, including molecular profiling (genomics, transcriptomics, proteomics, epigenomics), diverse medical imaging (CT, MRI, PET, ultrasound), digitized pathology slides, electronic health records (EHRs) with rich clinical annotations, and, increasingly, real-world data from wearable sensors and patient-reported outcomes. The sheer scale and variety of these data sources are overwhelming for human interpretation alone. Moreover, these data types are inherently heterogeneous in their structure, dimensionality, and noise characteristics, making their meaningful integration a computationally intensive and algorithmically complex task. Extracting actionable insights from this "big data" deluge requires sophisticated analytical frameworks that can not only process individual modalities but also discern intricate, non-linear relationships across them, which is where deep neural networks excel. The ability to understand a patient's cancer holistically from these combined perspectives is critical for advancing research and optimizing therapy.

3.2. Understanding Multimodal Data in Oncology

Multimodal data in oncology refers to the collection and integration of information from two or more distinct data sources or "modalities" pertaining to a single patient or tumor. Each modality offers a unique lens into the disease, and their synergistic combination provides a more comprehensive picture than any single one could achieve.

3.2.1. Genomic and Proteomic Data

Genomic data provides insights into the fundamental genetic alterations driving cancer, including somatic mutations, copy number variations, gene fusions, and gene expression profiles (transcriptomics). Next-generation sequencing has made it possible to rapidly profile entire tumor genomes, identifying driver mutations and potential therapeutic targets. Proteomic data, on the other hand, captures the functional output of these genetic changes, detailing protein expression levels, post-translational modifications, and protein-protein interactions. These molecular data modalities are crucial for understanding the underlying biological pathways disrupted in cancer, predicting drug sensitivity or resistance, and identifying novel biomarkers for cancer diagnosis. The sheer dimensionality and sparse nature of genomic and proteomic data make them challenging for traditional statistical methods but well-suited for the pattern recognition capabilities of DNNs.

3.2.2. Medical Imaging Data (Radiology and Pathology)

Medical imaging plays a pivotal role in cancer diagnosis, staging, and monitoring. Radiological images (CT, MRI, PET, ultrasound) provide macroscopic phenotypic information about tumor size, location, morphology, and metabolic activity. They are indispensable for non-invasive assessment. Complementing this, digitized histopathology slides (whole slide images, WSIs) offer microscopic details of tissue architecture, cellular morphology, and immune cell infiltration, forming the gold standard for definitive cancer diagnosis. Both radiological and pathological images are high-dimensional, complex visual data, making them ideal for analysis by convolutional neural networks (CNNs), which excel at image feature extraction. Integrating features extracted from these two distinct imaging modalities can provide a more accurate and robust cancer diagnosis.

3.2.3. Clinical and Wearable Sensor Data

Electronic Health Records (EHRs) contain a wealth of clinical data, including patient demographics, medical history, comorbidities, prior treatments, lab test results, and treatment outcomes. These structured and unstructured data provide the clinical context necessary to interpret molecular and imaging findings. Increasingly, real-world data from wearable sensors (e.g., smartwatches monitoring heart rate, sleep patterns, activity levels) and patient-reported outcomes (PROs) offer continuous, dynamic insights into a patient's physiological state and quality of life during and after treatment. Integrating these diverse clinical and real-world data streams with molecular and imaging data allows for a more holistic understanding of patient trajectories and informs personalized cancer diagnosis management strategies.

3.3. Deep Neural Networks: Enabling Multimodal Data Fusion

Deep neural networks (DNNs) are a class of machine learning algorithms inspired by the structure and function of the human brain. Their "deep" architecture, composed of multiple hidden layers, enables them to learn hierarchical representations of data, automatically extracting increasingly abstract and complex features from raw inputs. This capability is critical for processing and integrating multimodal oncology data, which is often high-dimensional, noisy, and intrinsically complex.

3.3.1. Architectures for Multimodal Fusion

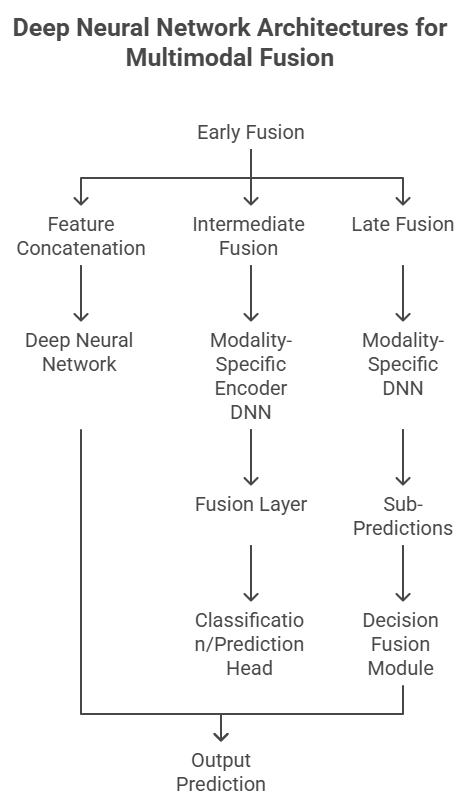

The integration of disparate data modalities within DNNs typically employs various fusion strategies:

- Early Fusion (Feature-Level Fusion): Raw features from different modalities are concatenated or otherwise combined into a single feature vector before being fed into a common DNN model. This approach is computationally efficient, but it assumes that the modalities are well aligned and can be meaningfully represented in a single feature space.

- Late Fusion (Decision-Level Fusion): Each modality is processed independently by its own specialized DNN (e.g., a CNN for images, an RNN for sequences), generating separate predictions or confidence scores. These individual predictions are then combined (e.g., by weighted averaging, majority voting, or another small neural network) to yield a final integrated decision. This method allows modality-specific features to be learned optimally but may miss subtle cross-modal relationships.

- Intermediate Fusion (Representation-Level Fusion): This is often considered the most powerful approach. Each modality is processed by a dedicated DNN encoder to learn a rich, latent representation. These learned representations are then combined (e.g., through concatenation, attention mechanisms, or specialized fusion layers such as transformers or graph neural networks) before being fed into a common classifier or predictor. This allows for both modality-specific feature learning and the discovery of complex interactions between modalities. Transformers, in particular, have shown great promise in learning relationships between different "tokens" (which can be features from different modalities), making them highly suitable for multimodal fusion. Graph neural networks (GNNs) are also gaining traction for modeling relationships between entities (e.g., genes, proteins, patients) across different data types.
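The three fusion strategies above can be contrasted in a short, dependency-free Python sketch. It is illustrative only and assumes each modality has already been reduced to a small numeric vector; the modality names (`imaging`, `genomic`), the weights, and the fixed attention query are hypothetical. In a real model, the per-modality encoders, classifier weights, and attention query would be learned by a DNN rather than set by hand.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def early_fusion(features: Dict[str, Vector], order: List[str], w: Vector) -> float:
    """Early fusion: concatenate raw features, then apply one shared (logistic) model."""
    x = [value for name in order for value in features[name]]
    if len(x) != len(w):
        raise ValueError("weight vector must match the concatenated feature length")
    return sigmoid(dot(w, x))


def late_fusion(features: Dict[str, Vector], models: Dict[str, Vector],
                vote_weights: Dict[str, float]) -> float:
    """Late fusion: one model per modality, then a weighted average of their probabilities."""
    total = sum(vote_weights[name] for name in features)
    return sum(vote_weights[name] * sigmoid(dot(models[name], features[name]))
               for name in features) / total


def attention_fusion(embeddings: Dict[str, Vector],
                     query: Vector) -> Tuple[Vector, Dict[str, float]]:
    """Intermediate fusion: softmax attention over same-length per-modality embeddings."""
    names = list(embeddings)
    scores = [dot(query, embeddings[name]) for name in names]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    z = sum(exps)
    attn = {name: e / z for name, e in zip(names, exps)}
    # Fused representation: attention-weighted sum of the modality embeddings.
    fused = [sum(attn[name] * embeddings[name][k] for name in names)
             for k in range(len(query))]
    return fused, attn


# Hypothetical inputs for one patient.
features = {"imaging": [0.8, 0.1], "genomic": [1.0]}
print(early_fusion(features, ["imaging", "genomic"], w=[1.0, -0.5, 0.7]))
print(late_fusion(features, {"imaging": [1.0, -0.5], "genomic": [0.7]},
                  {"imaging": 0.5, "genomic": 0.5}))
fused, attn = attention_fusion({"imaging": [1.0, 0.0], "genomic": [0.0, 1.0]},
                               query=[2.0, 0.0])
print(fused, attn)
```

The attention weights make explicit, per patient, how much each modality contributes to the fused representation, which is one reason intermediate fusion is often preferred over a fixed late-fusion average.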

3.3.2. Overcoming Data Challenges with DNNs

DNNs offer several advantages for handling common challenges in multimodal oncology data. They can be made robust to noise and missing data, especially when combined with imputation techniques or architectures designed to accept partial inputs. Through unsupervised or self-supervised learning, they can leverage vast amounts of unlabeled data, which is crucial in medical domains where labeled datasets are scarce and expensive to acquire. Furthermore, transfer learning, in which DNNs pre-trained on large generic datasets are fine-tuned on smaller, oncology-specific datasets, can substantially accelerate model development and improve performance. This adaptability makes DNNs powerful digital tools for cancer diagnosis.
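One common way to let a fusion model accept partial inputs is to pool only the modality embeddings that are actually present, and to randomly hide modalities during training (often called modality dropout) so the model does not come to depend on any single source. The pure-Python sketch below assumes each available modality has already been encoded into an embedding of the same length; the modality names and dropout probability are hypothetical, and mean pooling is a deliberately simple stand-in for learned fusion layers.

```python
import random
from typing import Dict, List, Optional

Embedding = Optional[List[float]]  # None marks a missing modality


def masked_mean_fusion(embeddings: Dict[str, Embedding], dim: int) -> List[float]:
    """Average only the modalities that are present; missing ones are ignored, not zero-filled."""
    present = [vec for vec in embeddings.values() if vec is not None]
    if not present:
        return [0.0] * dim  # hypothetical fallback when no modality is available
    if any(len(vec) != dim for vec in present):
        raise ValueError("all embeddings must have length dim")
    return [sum(vec[k] for vec in present) / len(present) for k in range(dim)]


def modality_dropout(embeddings: Dict[str, Embedding], p: float,
                     rng: random.Random) -> Dict[str, Embedding]:
    """Training-time augmentation: hide each present modality with probability p,
    but always keep at least one of the modalities that were originally present."""
    dropped = {name: (None if vec is not None and rng.random() < p else vec)
               for name, vec in embeddings.items()}
    if all(vec is None for vec in dropped.values()):
        present = [name for name, vec in embeddings.items() if vec is not None]
        if present:
            keep = rng.choice(present)
            dropped[keep] = embeddings[keep]
    return dropped


# Hypothetical patient with imaging and clinical data but no genomic profile.
patient = {"imaging": [1.0, 3.0], "genomic": None, "clinical": [3.0, 5.0]}
print(masked_mean_fusion(patient, dim=2))  # [2.0, 4.0]
print(modality_dropout(patient, p=0.5, rng=random.Random(0)))
```

Because missing modalities are excluded rather than filled with zeros, a patient without a genomic profile is represented by the average of what was actually measured.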

3.4. Applications of Multimodal Deep Learning Across the Cancer Continuum

The integration of multimodal data via DNNs is transforming every facet of cancer care, from refining diagnosis to personalizing management strategies and guiding therapy.

3.4.1. Enhanced Cancer Diagnosis and Classification

Multimodal deep learning has substantially improved the accuracy and efficiency of cancer diagnosis. By integrating radiological images (CT, MRI) with digitized pathology slides and genomic data, DNNs can identify subtle patterns indicative of malignancy that might be missed by human experts or unimodal analysis. For instance, in lung cancer, combining imaging and genomic data can lead to earlier and more accurate nodule characterization. In breast cancer, integrating mammography, ultrasound, and histopathology data with molecular markers yields more precise lesion classification and risk stratification. These digital tools can increase diagnostic confidence and reduce both false-positive and false-negative results. Numerous case studies report strong diagnostic performance, often matching or exceeding that of human experts in tasks such as prostate cancer grading from WSIs combined with clinical data.
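Claims about fewer false positives and false negatives are usually quantified with sensitivity and specificity, computed from a confusion matrix. A minimal sketch, assuming binary labels (1 = malignant, 0 = benign) and already-thresholded model predictions; the example labels are made up for illustration and are not study data.

```python
from typing import Dict, Sequence


def diagnostic_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Confusion-matrix error counts plus sensitivity and specificity for binary labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "false_positives": fp,
        "false_negatives": fn,
        # Sensitivity: share of true cancers that the model flags.
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        # Specificity: share of non-cancers that the model correctly clears.
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


# Hypothetical labels and predictions for seven lesions.
print(diagnostic_metrics([1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 0, 1, 0]))
```

Comparing a unimodal and a multimodal model on the same held-out cases with these metrics makes the trade-off between missed cancers and unnecessary follow-ups explicit.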

3.4.2. Precision Prognosis and Treatment Selection

Beyond diagnosis, multimodal deep learning models are transforming cancer management by providing more accurate prognostic predictions and guiding personalized treatment selection. By integrating clinical features, multi-omics data, and imaging biomarkers (radiomics), DNNs can predict patient survival, risk of recurrence, and response to specific therapies with considerable precision. For example, in glioblastoma, integrating MRI with genomic and clinical data can predict patient survival more accurately than any single modality. In ovarian cancer, multimodal models combining clinical data with proteomic profiles can predict response to platinum-based chemotherapy. This granular understanding helps clinicians select the optimal therapy for each patient, minimizing ineffective treatments and their associated toxicities and improving overall outcomes, in line with the broader vision of precision oncology.
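Statements that one survival model predicts outcomes "more accurately" than another are commonly backed by Harrell's concordance index (C-index): the fraction of comparable patient pairs whose predicted risks are ordered consistently with their observed survival times. The sketch below is a plain O(n²) implementation that assumes right-censored data, where a pair is comparable only if the patient with the shorter time had an observed event. The cohort and the two risk-score lists (`risk_a`, `risk_b`) are invented purely to show the call and are not results from any study.

```python
from typing import Sequence


def concordance_index(times: Sequence[float], events: Sequence[bool],
                      risk_scores: Sequence[float]) -> float:
    """Harrell's C-index: higher risk should mean shorter survival.
    1.0 = perfect ranking, 0.5 = ranking no better than chance."""
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored patient cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:  # patient i had the event before patient j's time
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5  # tied predictions get half credit
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Hypothetical cohort: survival in months and event flags (True = death observed).
times = [5, 12, 20, 30, 40]
events = [True, True, False, True, False]
risk_a = [0.6, 0.7, 0.2, 0.4, 0.3]  # hypothetical model A
risk_b = [0.9, 0.7, 0.5, 0.3, 0.1]  # hypothetical model B
print(concordance_index(times, events, risk_a))  # 0.875
print(concordance_index(times, events, risk_b))  # 1.0
```

Well-tested implementations exist in survival-analysis libraries; the point here is only to show what a reported C-index improvement measures.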

3.4.3. Drug Discovery and Repurposing

Multimodal deep learning is also accelerating the drug discovery pipeline. By integrating data from chemical libraries, genomic profiles of cancer cell lines, drug sensitivity assays, and patient data, DNNs can predict novel drug-target interactions, identify potential drug candidates, and even suggest existing drugs for repurposing in new cancer indications. These models can help anticipate drug effects on biological pathways, predict toxicity profiles, and identify the patient subgroups most likely to respond, streamlining the preclinical and early clinical development phases. This data-driven approach promises to bring new cancer treatment options to patients faster and more efficiently.

4. Methodology

This review article aims to provide a comprehensive analysis of how multimodal data integration enabled by deep neural networks (DNNs) is changing cancer care. The core objective is to synthesize the latest research, elucidate the mechanisms of data fusion, highlight successful case studies in cancer diagnosis, and explore the implications for cancer management strategies and for therapy more broadly.

A systematic and extensive literature search was conducted across several major electronic databases, including PubMed, Scopus, Web of Science, and Google Scholar. The search encompassed peer-reviewed publications from January 2020 to June 2025, prioritizing recent advancements in this rapidly evolving field. Key search terms and their combinations included: "multimodal data integration," "deep neural networks," "deep learning," "oncology," "cancer diagnosis," "precision medicine," "genomics," "radiomics," "pathomics," "clinical data," "electronic health records," "wearable sensors," "AI in cancer," "fusion strategies," "challenges in AI oncology," "ethical AI in medicine," "AI regulatory frameworks," and "validation of AI medical devices." To ensure comprehensive coverage of the review's specific scope, the phrases "cancer diagnosis case studies," "cancer diagnosis certification," "cancer diagnosis digital tools," "cancer diagnosis latest research," "cancer diagnosis management strategies," and "cancer diagnosis therapy overview" were also incorporated into the search strategy where relevant.

Inclusion criteria focused on original research articles (both theoretical and applied), comprehensive review articles, and authoritative position papers that explicitly discussed multimodal data integration using deep learning in any aspect of oncology (e.g., diagnosis, prognosis, treatment prediction, drug discovery). Studies that focused solely on unimodal AI applications without multimodal integration, or that did not use deep learning, were generally excluded unless they provided essential foundational context. Clinical guidelines, regulatory documents pertaining to the certification of AI tools for cancer diagnosis, and ethical frameworks for AI in healthcare were also considered. Article selection involved an initial screening of titles and abstracts for relevance, followed by full-text review against the inclusion criteria.

Data extraction involved systematically identifying and summarizing key findings, including the types of data modalities integrated, specific DNN architectures employed, fusion strategies used (early, intermediate, or late fusion), performance metrics achieved, and the clinical or biological insights derived. For case studies in cancer diagnosis, specific examples of improved accuracy, efficiency, or clinical utility were noted. Information regarding challenges, limitations, ethical considerations, and future directions for digital diagnostic tools was also extracted. A qualitative synthesis approach was then applied to integrate these diverse findings, identifying overarching themes, trends, technological advancements, and persistent hurdles. This synthesis aimed to construct a coherent narrative of how DNN-powered multimodal data integration is shaping the future of oncology, in keeping with the vision of advanced cancer management strategies and integrated therapy planning in the era of computational precision medicine.

5. Discussion

The confluence of rapidly growing multimodal oncology data and sophisticated deep neural networks represents a paradigm shift in how we understand and manage cancer. This review has highlighted how multimodal data integration in oncology, powered by DNNs, is moving beyond the fragmented insights of unimodal analysis toward a holistic, data-driven understanding of this complex disease. Advanced AI is now turning disparate data streams (the intricacies of genomic sequences, the rich visual information of radiology and pathology, and the dynamic context of clinical and real-world data) into actionable intelligence with a precision and at a scale not previously possible. This integrated approach is shaping the future of cancer diagnosis and research and redefining how therapy is selected and delivered.

The inherent capacity of DNNs to learn complex, non-linear relationships across heterogeneous data types is the cornerstone of this revolution. From specialized CNNs for image feature extraction to transformers and GNNs adept at fusing diverse representations, these architectures are unlocking a depth of biological understanding that was previously unattainable. The case studies discussed, from enhanced diagnostic accuracy in complex tumor types to precise prognostic predictions and personalized treatment recommendations, underscore the immediate and tangible impact of these digital tools. Their ability to identify subtle patterns indicative of malignancy or therapeutic response, often invisible to the human eye or to traditional analytical methods, is leading to earlier detection, more informed treatment selection, and potentially better patient outcomes. Furthermore, the role of multimodal deep learning in accelerating drug discovery and repurposing offers a promising avenue for bringing novel treatment options to patients more rapidly.

Despite this transformative potential, the widespread clinical adoption and full realization of multimodal deep learning in oncology face significant hurdles that require concerted effort from researchers, clinicians, policymakers, and industry stakeholders.

- Data Availability, Quality, and Annotation: The success of DNNs hinges on access to vast, high-quality, and comprehensively annotated multimodal datasets. Data silos across institutions, privacy concerns, varying data collection standards, and the sheer effort required for expert labeling remain major impediments. Solutions such as federated learning, which allows models to be trained on decentralized datasets without sharing raw patient data, offer promising avenues to address privacy while enabling collaborative model development.

- Model Interpretability and Explainability: For clinical decision-making, "black-box" AI models pose a significant challenge. Clinicians require interpretability (understanding why a model made a particular prediction) to trust and validate its recommendations. Research into explainable AI (XAI) techniques, which provide insights into model reasoning (e.g., saliency maps for image data, feature importance scores for genomic data), is crucial for building clinician confidence and enabling effective human-AI collaboration.

- Validation and Certification: Before widespread clinical deployment, multimodal DNN models require rigorous, prospective validation in diverse patient cohorts to ensure generalizability and robustness. This demands a robust regulatory framework for certifying AI-driven medical devices for cancer diagnosis, ensuring their safety, efficacy, and clinical utility. Regulators in the US (the FDA) and in Europe (under the CE marking regime) are actively developing guidance for AI/ML-based Software as a Medical Device (SaMD), but this guidance continues to evolve to keep pace with the technology. Demonstrating reliable performance across different demographics, clinical settings, and disease subtypes is essential for achieving certification.

- Integration into Clinical Workflows: Deploying complex AI models seamlessly into existing clinical workflows presents practical challenges. These include interoperability with existing EHR systems, user-friendly interfaces for clinicians, and presenting AI insights in an actionable format that complements, rather than disrupts, clinical practice. Effective cancer management strategies will increasingly depend on this seamless integration.

- Ethical Considerations and Bias: The use of AI in oncology raises critical ethical concerns, including data privacy and security, potential algorithmic bias (e.g., models trained on unrepresentative datasets can lead to disparities in care for certain patient populations), and questions of accountability when AI assists in medical decisions. Ensuring equitable access to these advanced digital tools and addressing their potential to exacerbate health disparities are paramount.
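Federated learning, listed above as a response to data silos, is often implemented with federated averaging (FedAvg): each hospital trains on its own records, and only model parameters are sent to a coordinating server, which averages them weighted by each site's sample count. The pure-Python sketch below assumes a toy linear model without a bias term, trained by per-sample gradient descent on squared error; the site data, learning rate, and function names are hypothetical, and a real deployment would add secure aggregation and other privacy safeguards not shown here.

```python
from typing import List, Sequence, Tuple

Site = Tuple[List[List[float]], List[float]]  # (feature rows, targets) held locally by one site


def local_update(global_w: Sequence[float], X: List[List[float]], y: List[float],
                 lr: float = 0.05, epochs: int = 1) -> List[float]:
    """Runs at a site: gradient descent on squared error, starting from the global weights."""
    w = list(global_w)
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            err = sum(wk * xk for wk, xk in zip(w, xi)) - yi
            w = [wk - lr * err * xk for wk, xk in zip(w, xi)]
    return w


def federated_average(site_weights: Sequence[Sequence[float]],
                      site_sizes: Sequence[int]) -> List[float]:
    """Runs on the server: sample-size-weighted average of the site models."""
    if not site_weights or len(site_weights) != len(site_sizes):
        raise ValueError("need exactly one sample count per site model")
    total = sum(site_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(site_weights[0])
    return [sum(n * w[k] for w, n in zip(site_weights, site_sizes)) / total
            for k in range(dim)]


def fedavg_round(global_w: Sequence[float], sites: Sequence[Site],
                 lr: float = 0.05) -> List[float]:
    """One communication round: only weight vectors leave each site, never raw records."""
    updates = [local_update(global_w, X, y, lr) for X, y in sites]
    return federated_average(updates, [len(y) for _, y in sites])


# Two hypothetical hospitals whose data follow y = 2 * x.
sites = [([[1.0], [2.0]], [2.0, 4.0]), ([[3.0]], [6.0])]
w = [0.0]
for _ in range(20):
    w = fedavg_round(w, sites)
print(w)  # close to [2.0] after 20 rounds
```

Weighting by sample count keeps a small site from pulling the shared model as strongly as a large one; in practice, differences in case mix between hospitals make this averaging step an active research topic.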

Looking toward the future, particularly 2025 and beyond, several key trends will define the trajectory of multimodal deep learning in oncology. Advanced DNN architectures, including sophisticated transformer-based models and graph neural networks, will become more prevalent, capturing ever more nuanced inter-modal relationships. The adoption of self-supervised and weakly supervised learning will alleviate the burden of extensive data labeling. Furthermore, the concept of "digital twins" in oncology, in which comprehensive patient-specific models are built by integrating all available multimodal data, could enable virtual experimentation and personalized treatment optimization. The field will also place greater emphasis on real-time data integration, including continuous monitoring from wearables, to enable adaptive management strategies and proactive interventions. Ultimately, the synergy between human expertise and powerful digital tools will define the next generation of cancer care, leading to more precise diagnoses, personalized treatments, and improved outcomes for patients worldwide.

6. Conclusion

The era of deep neural networks has opened an unprecedented opportunity for multimodal data integration in oncology, fundamentally transforming our capacity to understand and combat cancer. By fusing diverse data modalities, from genomics and proteomics to sophisticated imaging and comprehensive clinical records, DNNs are unlocking insights that were previously beyond human analytical reach. This approach is not merely an incremental improvement; it represents a paradigm shift that promises to reshape cancer diagnosis research, enhance management strategies, and transform how therapy is planned and delivered.

The successes reported in case studies, ranging from earlier detection and more accurate classification to precise prognostication and personalized treatment selection, affirm the considerable potential of these digital tools for cancer diagnosis. However, translating this promise into widespread clinical reality requires addressing critical challenges related to data accessibility, model interpretability, robust validation, and ethical considerations to ensure fair and equitable application. Looking toward 2025 and beyond, the continued evolution of DNN architectures, coupled with advances in data governance and regulatory frameworks, will pave the way for a new era of precision oncology. Collaboration between human oncologists and intelligent AI systems, leveraging the holistic insights derived from integrated multimodal data, will ultimately lead to more effective, personalized, and patient-centric cancer care.

Read more such content on @ Hidoc Dr | Medical Learning App for Doctors


© Copyright 2026 Hidoc Dr. Inc.
